This is a continuation of Data Loader in Salesforce Part I in Data Management Salesforce.

1. Updating Data to Salesforce Using Data Loader

We create the updated file with compulsory ID fields to map data correctly.

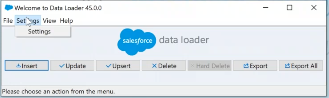

Step 1. Click on UPDATE,

Step 2. Select the target object and browse the update file.

Step 3. Map the columns to be updated properly. For example, the New price column is to be updated to the Price column. To avoid manual matching in the future you can click on save mapping as well.

Step 4. Browse the location folder for success and error files and Finish!

2. Which is the column/field, on which Update activity is relying?

ID field.

3. Upserting Data to Salesforce Using Data Loader

What is UPSERT?

UPdate + inSERT = UPSERT. It means, by using one command/button and using one file, we can do insert as well as update with different records. This kind of work is useful in real-time project scenarios when you don’t have a chance to check for old or new records. Thereby insert new and update old records in one go. It compares ID fields to make updates/inserts.

UPSERT requires External ID as update makes use of Salesforce ID

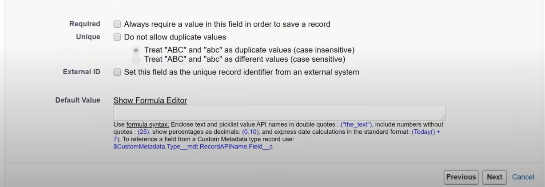

External ID can be created while creating a custom field by selecting a checkbox. It’s specifically designed for integration to contact Salesforce applications from an external system.

For example, Salesforce records are being connected with the SAP/.NET platform. It works only with the Upsert command (not insert/update) allowing you to work with other external systems’ record Ids instead of using Salesforce ID.

Fields marked with External ID get indexed and are included in Global Search. (Salesforce record ID which we see in URL, is for internal purposes). 25 such fields are possible per Salesforce Object.

4. What types of fields e can create as External ID fields?

Text, Number, and Email fields.

5. What is the best practice for using an External Id?

To also mark the field as unique and case sensitive.

Steps to Upserts in Data Loader

Step 1. Click on Upserts

Step 2. Select the object and browse the upsert file.

Step 2a. Choose the field having the external ID, to use it for data matching. Next and Finish!

Hereby the record is compared making use of an External Id.

An example of the case is when we receive a file for let’s said an oracle system to be updated in our salesforce system. The oracle file’s Id field can be made as External Id in Salesforce that can be compared to check for new and old records whenever we need to update ( add new and update old records) in the Salesforce database.

6. Data Deletion Using Data Loader in Salesforce

How many minimum columns do we need for the Delete action?

1 column, i.e ID. We can delete records with the Id field alone. So the file for deletion contains ID fields columns

Where to find ID?

Easily from the URL as in URL/ID

Step 1. Click on Delete.

Step 2. Select the object and browse the deletion file.

Step 3. Map field in file to that of the data table.

Step 4. Finish with browsing location of success and error files.

You receive a warning to ask if you want the deletion to happen and voila.

Difference between Delete and Hard Delete?

Data form Delete stays in the Recycle bin for a minimum of 15 days. While Hard Deleted data bypasses the Recycle Bin and gets permanently deleted.

Thereby Hard Delete stays deactivated in the Data Loader window. It can be activated via Manage Users: System Administrator settings (enable bulk API Hard delete) though not advised. Preferably used in the Sandbox environment for sample and testing data projects.

7. Can we bypass a mandatory field during the data loading?

It’s not possible and Salesforce will throw an error!

8. Can we deactivate the Trigger as we don’t want them to work on some specific records which we are loading in DL?

(Deactivation of trigger not advised ON PROD as we are removing a business logic on production by that.)

We can bypass this by adding one more column while we are loading the data with DL. Example: “DataFromDataLoader” with binary logic: Yes/No. And further, add a rule to check for this value in the automation.

9. Working with the Error file

Remove the last Error Column. Modify the data that caused an error and re-upload the same file.

Benefits of Error File: No need to separate successful and failed records.

10. Types of Record ID we have in Salesforce

We have two types broadly.

18 letter Id: This Id is used externally by salesforce for example when data is exported through Data Loader. This is made Case insensitive to match case insensitive platforms outside Salesforce.

15 letter Id: As for internal use, Salesforce deploys 15 letter Ids as such used in the URL. This is Case sensitive. Example:salesforce.com/0056g000062V3oa

11. While coding, within Salesforce, what digit ID I will get?

15 Digit.

12. Again to send data to Oracle, How to convert 15 digit ID to 18 digit ID?

Using CASESAFEID(id) function.

This 0056g000062V3oa (case-sensitive) converts to 0056g000062V3oaKWs (case-insensitive).

To capture the 18-character non-case sensitive Id for records in a field, utilize the CASESAFEID() function in a Formula field.

1. Navigate to the Custom Fields and Relationships of the Object:

Setup> Customize> Object> Fields> Custom Fields and Relationships> New

2. Select Formula as the Field Type

3. Name the Field and select Text as the Return Type

4. Input the following Formula into the Formula Editor:

CASESAFEID(Id)

13. Drawbacks of Data Loader

If there are duplicate records in the file to be uploaded, the data loader itself is not capable of getting rid of duplicate records.

To resolve we need to identify a field that remains unique for every record in the database, like employee Id, Email Id, Transaction Number, etc. and we create the field to be unique in the Salesforce database.

Other than Data Loader in Salesforce we also have a not-so-famous tool Data Import Wizard for similar uses.

14. Differentiate Data Loader vs Data Import Wizard

| Data Loader | Data Import Wizard |

|---|---|

| designed for technical users | designed for less-technical users (like business users) |

| Can process up to 50,00,000 (50 lac) records at a time and can be used for complex imports of large size | Used for smaller and simpler imports of up to 50,000 records |

| Can work with any Object standard as well as any custom. | Can only import data of Account, Contact, Leads, Solution, Campaign Member, and Custom Objects. |

| Can do insert,delete, hard delete,update, and upsert | Can only insert, update, and upsert. |

| Can be scheduled to automate via external knowledge. | Can not be scheduled. |

15. Data Export Salesforce

We also have a Data Export option available in salesforce to export as well as schedule backup from all or selective objects.

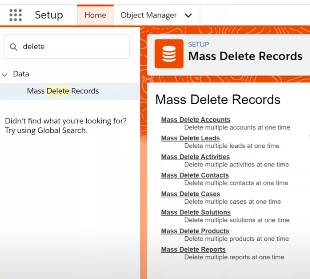

16. Mass Delete Records in Salesforce

Another point of notice in the Data Management scope is the Mass Delete Record option. This Mass Delete Record option comes in handy for cases when Data Loader can’t delete the records like Reports in Salesforce.

17. Can we import history objects in Salesforce using a data loader?

History cannot be created since objects like AccountHistory, CaseHistory, OpportunityHistory, and ContactHistory don’t support create/update operations. If we need that data we should migrate it into a Read Only Custom Object.

What more cannot be migrated using data loader?

- Custom Metadata being a metadata record.

- Platform Events cannot be migrated.

- history of the object are automatically created when any changes are made by user on record and don’t have creatable and updatable attributes.

18. When to use Soap API (default mode) vs bulk api in data loader

Using SOAP API ,we do not need to write any code to use Data loader.By default Data Loader is using the SOAP-based API.

Salesforce officially recommends using the Bulk API when we are processing more than 250K records. You might also use this when you are having issues hitting API call limits. The Bulk API is optimized to load or delete a large number of records asynchronously. It is faster than the SOAP-based API due to parallel processing and fewer network round-trips.

Bulk API does not allow zip file size more than 10 MB.

very nice article.keep it up

Hi smriti. Thank you for the kind words. Feel free to join our telegram community.

hi,very good collection for interview purpose.

it would be more helpful if i could copy the questions.

Hey pooja… had to put that to avoid content copying and plagiarism. Feel free to jst save the page or print 🖨